Spark Installation On OS X

This post covers installing Spark and a sample use of MLlib, so I'll split it into two parts.

Installing Spark

Install the latest version of Spark via Homebrew

$brew install apache-spark

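After installation, spark-shell should in principle already run, but let's also lower the log level to cut down on verbose logging. First go to Spark's conf directory. On my machine it is

/usr/local/Cellar/apache-spark/1.2.0/libexec/conf (1.2.0 is the Spark version)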

$cd /usr/local/Cellar/apache-spark/1.2.0/libexec/conf

Copy the log4j template in that directory to log4j.properties

$cp log4j.properties.template log4j.properties

Then edit the log4j.properties file

$vim log4j.properties

Change INFO to ERROR

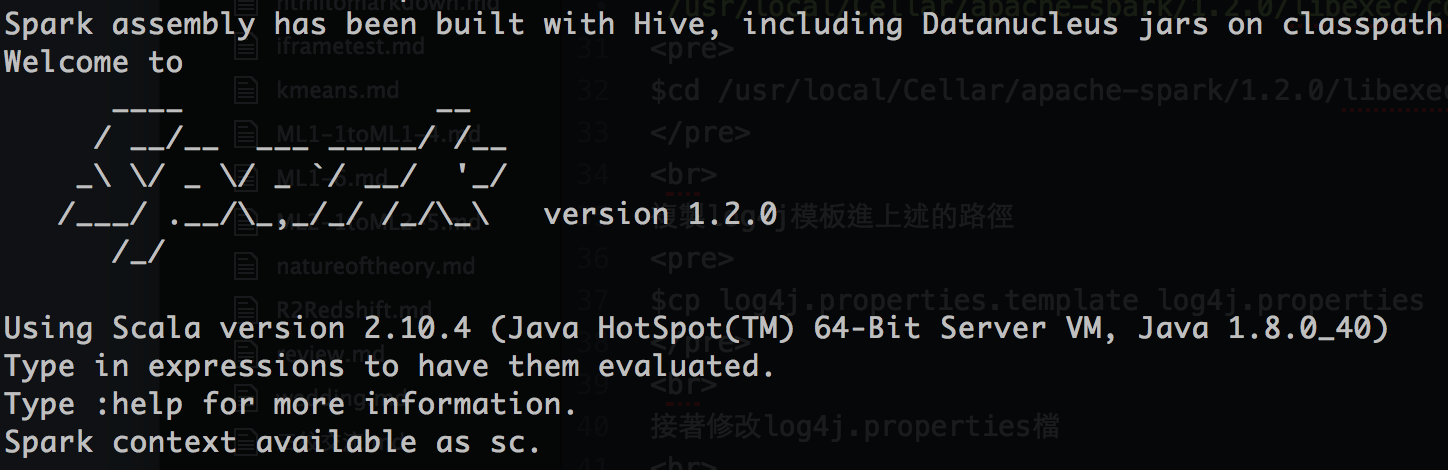

log4j.rootCategory=ERROR, console

Then you can try running spark-shell!

$cd /usr/local/Cellar/apache-spark/1.2.0
$./bin/spark-shell
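If you would rather not edit log4j.properties, the log level can probably also be lowered for the current session from inside spark-shell. This is a minimal sketch, assuming the installed Spark bundles log4j 1.x (which the log4j.properties file above suggests). It is not something the steps above require.

```scala
// Run inside spark-shell. Assumes log4j 1.x is on the classpath,
// as it is for the Spark release used in this post.
import org.apache.log4j.{Level, Logger}

// Raise the root logger threshold so only ERROR and above are printed.
Logger.getRootLogger.setLevel(Level.ERROR)
```

Unlike the properties file, this only affects the running shell and has to be repeated each time.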

MLlib Demo

Taking ALS as the example, first import the required classes and load the ratings data

import org.apache.spark.mllib.recommendation.ALS
import org.apache.spark.mllib.recommendation.MatrixFactorizationModel
import org.apache.spark.mllib.recommendation.Rating

val data = sc.textFile("libexec/data/mllib/als/ydtest.data")
val ratings = data.map(_.split(',') match {
  case Array(user, item, rate) => Rating(user.toInt, item.toInt, rate.toDouble)
})
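The parsing step expects each line of the data file to look like `user,item,rating`. The sketch below shows the same split-and-match logic on plain strings, so it runs without Spark. `ParseDemo`, `ParsedRating`, and the sample lines are made up for illustration, and returning `None` for malformed lines is my own addition (the code in this post would throw a `MatchError` instead).

```scala
import scala.util.Try

object ParseDemo {
  // Hypothetical stand-in for MLlib's Rating so the sketch runs without Spark.
  case class ParsedRating(user: Int, product: Int, rating: Double)

  // Parse one "user,item,rating" line; return None for malformed input.
  def parseLine(line: String): Option[ParsedRating] =
    line.split(',') match {
      case Array(user, item, rate) =>
        Try(ParsedRating(user.trim.toInt, item.trim.toInt, rate.trim.toDouble)).toOption
      case _ => None
    }

  def main(args: Array[String]): Unit = {
    // Illustrative lines only, not taken from the real data file.
    val sample = Seq("1,1,5.0", "1,2,1.0", "not a rating line")
    sample.foreach(line => println(s"$line -> ${parseLine(line)}"))
  }
}
```

If your file may contain blank or broken lines, something like `data.flatMap(parseLine)` style filtering could be applied before building the ratings, though the demo data should not need it.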

// Build the recommendation model using ALS
val rank = 10
val numIterations = 10
// The last argument (0.01) is the regularization parameter lambda
val model = ALS.train(ratings, rank, numIterations, 0.01)

// Evaluate the model on rating data
val usersProducts = ratings.map { case Rating(user, product, rate) =>
  (user, product)
}
val predictions = model.predict(usersProducts).map { case Rating(user, product, rate) =>
  ((user, product), rate)
}
val ratesAndPreds = ratings.map { case Rating(user, product, rate) =>
  ((user, product), rate)
}.join(predictions)
val MSE = ratesAndPreds.map { case ((user, product), (r1, r2)) =>
  val err = (r1 - r2)
  err * err
}.mean()
println("Mean Squared Error = " + MSE)
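To make the evaluation step easier to follow, here is the same idea on ordinary Scala collections: match actual and predicted ratings by their (user, product) key, then average the squared differences. `MseDemo` and the numbers are hypothetical, and the sketch assumes each (user, product) pair appears at most once, which a `Map` enforces but an RDD join does not.

```scala
object MseDemo {
  // Keys are (user, product) pairs; values are ratings.
  def meanSquaredError(actual: Map[(Int, Int), Double],
                       predicted: Map[(Int, Int), Double]): Double = {
    // Keep only keys present on both sides, similar to the RDD join.
    val squaredErrors = actual.toSeq.flatMap { case (key, r1) =>
      predicted.get(key).map { r2 =>
        val err = r1 - r2
        err * err
      }
    }
    squaredErrors.sum / squaredErrors.size
  }

  def main(args: Array[String]): Unit = {
    // Made-up ratings for illustration.
    val actual = Map((1, 1) -> 5.0, (1, 2) -> 1.0)
    val predicted = Map((1, 1) -> 4.0, (1, 2) -> 2.0)
    println("Mean Squared Error = " + meanSquaredError(actual, predicted))
  }
}
```

Note that the evaluation in this post is done on the same ratings the model was trained on, so the MSE it prints measures fit to the training data rather than performance on unseen ratings.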

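One thing to watch is the input data path. The relative path above works because the shell was started from the Spark install directory. If you start the shell with

$spark-shell

from some other directory, the path must be set to an absolute path.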
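One way to build that absolute path is to resolve the relative data path against the Spark install directory. The sketch below uses only the JDK, and `PathDemo` and `toAbsolute` are names I made up. The install directory is the one from my machine, so adjust it to your own version.

```scala
import java.nio.file.Paths

object PathDemo {
  // Join a base directory and a relative path into one normalized path string.
  def toAbsolute(base: String, relative: String): String =
    Paths.get(base).resolve(relative).normalize().toString

  def main(args: Array[String]): Unit = {
    // Install directory from this post; yours may differ.
    val sparkHome = "/usr/local/Cellar/apache-spark/1.2.0"
    val dataPath = toAbsolute(sparkHome, "libexec/data/mllib/als/ydtest.data")
    // Inside spark-shell this string would be passed to sc.textFile(dataPath).
    println(dataPath)
  }
}
```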